Chapter 1 - Desperately Seeking Patterns

Ever wondered how your brain instantly recognizes your friend's face in a crowded room, or how a machine can tell the difference between a cat and a dog in a photo? Welcome to the fascinating world of pattern recognition - the fundamental skill that both humans and machines desperately seek to master.

The Universal Quest for Patterns

Think about your morning routine. You wake up, recognize the alarm sound, identify your coffee mug among others, and automatically navigate to the bathroom without bumping into walls. All of these seemingly simple tasks involve one crucial ability: pattern recognition.

But here's the mind-blowing part - you're not consciously thinking about any of this. Your brain is constantly, effortlessly finding patterns in the chaos of sensory information bombarding you every second.

How Animals and Humans Master Pattern Recognition

Let's start with a simple example. Imagine you're walking in a forest and suddenly freeze. Why? Because somewhere in the rustling leaves, your brain detected a pattern that screamed "DANGER!". Maybe it was the particular way shadows moved, or a sound frequency that didn't belong. This isn't magic - it's millions of years of evolution fine-tuning our pattern detection systems.

Animals do this too, often better than us. A mother bird can spot her chick among hundreds of others. A dog can recognize its owner's car from blocks away just by the engine sound. These aren't learned behaviors in the traditional sense - they're the result of neural networks (yes, biological ones!) that have been optimized over countless generations.

🤔 Question to Ponder: What patterns do you unconsciously recognize every day that you've never really thought about?

The Machine Learning Challenge: Teaching Silicon to See

Now here's where it gets interesting. If biological brains are so good at pattern recognition, why can't we just... copy them?

Well, that's exactly what early AI pioneers tried to do. But first, they had to understand: What exactly are patterns?

Think of patterns as:

Linear equations that describe relationships between data points

Supervised learning scenarios where we show machines examples with correct answers

Regression problems where we predict outcomes (like house prices) based on input features (like size, location, age)

Imagine you're teaching a friend to estimate pizza delivery time. You might say: "Generally, it takes about 20 minutes base time, plus 2 minutes for every mile of distance, plus 5 extra minutes if it's raining." That's essentially what we're teaching machines - mathematical relationships hidden in data.

The Birth of Artificial Intelligence: McCulloch and Pitts

In 1943, two brilliant minds - Warren McCulloch (a neuroscientist) and Walter Pitts (a logician) - published a groundbreaking paper: "A Logical Calculus of the Ideas Immanent in Nervous Activity"

Sounds intimidating? Let me break it down with an analogy.

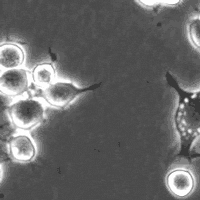

Imagine a biological neuron as a simple decision-maker sitting at a desk. This neuron gets messages (inputs) from other neurons, and based on these messages, it either gets excited enough to "fire" (send a signal) or stays quiet.

The McCulloch-Pitts (MCP) neuron was like creating a super-simplified version of this:

Takes any arbitary number of binary inputs (x1, x2, ... , xN) (think: 0 or 1, like on/off switches)

Produces exactly one binary output (y) (either 0 or 1)

Uses a simple rule to decide: "If enough inputs are 'on', then I'll turn 'on' too". In mathematical terms, output will be 1 if function of inputs is greater than threshold, else it will be 0.

Building Logic with Artificial Neurons

Here's the cool part - you could actually build basic computer logic gates using these artificial neurons! Want an AND gate? Set up an MCP neuron that only fires when BOTH inputs are 1. Want an OR gate? Make it fire when ANY input is 1.

But there was a catch - and it was a big one. The MCP neuron was like having a calculator that could do math, but you had to manually tell it every single rule (i.e. manually configure the threshold value). It couldn't learn these rules by itself.

🤔 Question to Ponder: If you had to manually program every decision rule for recognizing faces, how many rules do you think you'd need?

Frank Rosenblatt's Revolutionary Leap: The Perceptron

Enter Frank Rosenblatt in 1958, a psychologist who was inspired by both McCulloch-Pitts neurons and Donald Hebb's revolutionary idea about learning.

Hebb's Insight: "Neurons that Fire Together, Wire Together"

Donald Hebb, a Canadian psychologist, had a eureka moment about how brains actually learn. He realized that when neurons consistently activate together, their connection gets stronger. It's like a path in the forest - the more it's used, the more defined it becomes.

This concept, known as Hebbian learning, can be explained with a simple analogy:

Imagine you're learning to play guitar. At first, your fingers fumble around the fretboard. But as you practice the same chord over and over, the neural pathways controlling those specific finger movements get stronger and more automatic. That's Hebbian learning in action!

The Mark 1 Perceptron: A Learning Machine

Rosenblatt took these insights and created something revolutionary - the Mark 1 Perceptron. This wasn't just another calculator; it was a machine that could actually learn.

Here's what made it special:

Unlike the MCP neuron:

Inputs (x1, x2, ... , xN) didn't have to be just 0 or 1 (they could be any numbers)

It used weighted sums (w1, w2, ... , wN) (some inputs mattered more than others)

It had a bias term (b) (like giving the neuron a starting opinion)

Output (y) was either -1 or +1 (instead of 0 or 1)

Most importantly: The weights could be automatically adjusted based on experience!

A Real-World Example: The Obesity Classifier (Binary Classification)

Let's make this concrete with a relatable example. Imagine you're building a perceptron to determine if someone is obese based on their weight and height.

Your perceptron might learn something like:

The beauty is that the perceptron learns these weights (0.7, -0.4) and the bias by looking at thousands of examples. It's like having a student who gets better at estimation by seeing more and more examples.

🤔 Question to Ponder: What other simple classification problems do you think a perceptron could solve? What about complex ones it might struggle with?

The Geometric Beauty of Learning

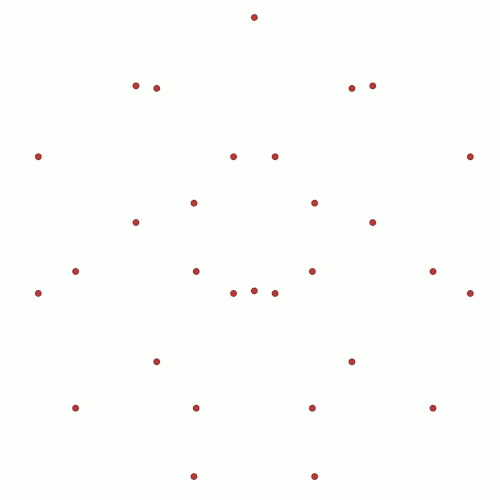

Here's where it gets visually stunning. When a perceptron learns, it's essentially finding the best line (or in higher dimensions, a hyperplane) to separate different categories of data.

Think of it like this: Imagine you're looking down at a basketball court where Team A players are wearing red jerseys and Team B players are wearing blue jerseys. A perceptron's job is to draw a line that separates all the red jerseys on one side from all the blue jerseys on the other side.

The weights determine the slope of this line, while the bias determines how far the line is from the center of the court.

The Mark 1's Impressive Achievement

Rosenblatt's Mark 1 Perceptron was no toy. It had:

400 inputs (from a 20×20 pixel image)

Ability to categorize letters of the alphabet from images (Binary classification like X or E, square or circle etc.)

Self-adjusting weights that improved with experience

When this machine learned to recognize letters, it wasn't storing the letters themselves. Instead, it was storing knowledge in the strengths of its connections - just like how your brain stores the skill of riding a bicycle not as a set of instructions, but as refined neural pathways.

The Mathematical Guarantee

Here's what made the perceptron truly revolutionary: Rosenblatt and his colleagues proved mathematically that a perceptron would always find the correct separating line if the data could be separated by a straight line (what we call "linearly separable" data).

This was like proving that a student would always learn to solve a particular type of math problem, given enough practice and the right teaching method.

But here's the catch - not all real-world problems are linearly separable. Sometimes you need a curved line, or multiple lines, or something far more complex. It's like trying to separate mixed nuts with a single straight cut - sometimes it works, sometimes it doesn't.

The Philosophical Question

As we wrap up this journey through the early days of machine learning, we're left with a profound question that Rosenblatt himself pondered:

Is identifying correlations and patterns the same thing as thinking and reasoning? 🤔

When your brain recognizes a face, is it truly "understanding" that face, or is it just incredibly good at pattern matching? When a perceptron correctly classifies letters, is it "reading," or is it performing sophisticated statistical analysis?

The Legacy: From Perceptrons to Modern AI

The humble perceptron, born in 1958, laid the foundation for everything we see in AI today:

Large Language Models like GPT that can write and reason

Self-driving cars that navigate complex environments

Medical diagnosis systems that spot patterns doctors might miss

Recommendation algorithms that know what movie you'll like

Every time you use voice recognition, translate text, or get a personalized recommendation, you're benefiting from the lineage of ideas that started with those first artificial neurons desperately seeking patterns in data.

Questions for Reflection

As you go about your day, consider these thought-provoking questions:

🧠 Pattern Recognition in Daily Life: What patterns do you recognize automatically that you've never consciously thought about? How might a machine struggle with these same patterns?

🤖 Learning vs. Programming: Is there a fundamental difference between a machine learning a pattern from data versus a human explicitly programming that pattern? What are the implications?

🎯 The Correlation Question: When an AI system identifies a correlation (like "people who buy bread also buy milk"), is it understanding anything meaningful, or just processing statistics? Where do we draw the line?

🔮 Future Implications: If pattern recognition is fundamental to intelligence, what new types of patterns might future AI systems discover that humans have never noticed?

⚖️ The Bias Problem: Since perceptrons learn from examples, what happens when those examples contain human biases? How do we ensure fair pattern recognition?

Conclusion: The Never-Ending Quest

The story of machine learning is ultimately a story about the universal quest for understanding patterns in our complex world. From the first McCulloch-Pitts neuron to today's sophisticated AI systems, we've been on a journey to capture the essence of learning itself.

But perhaps the most beautiful aspect of this journey is that we're still desperately seeking patterns - not just in data, but in understanding what it truly means to learn, to think, and to be intelligent.

As you leave this exploration, remember that every time you effortlessly recognize a friend's laugh, predict the weather from cloud patterns, or understand the emotion in someone's voice, you're performing feats of pattern recognition that still inspire and challenge the brightest minds in artificial intelligence.

The quest continues, and in many ways, we're all still desperately seeking patterns - both human and machine, biological and artificial, working together to decode the mysteries of intelligence itself.

Last updated